With the Pi 5 being quite powerful & most container images coming around to providing arm64 builds, I set out to build a Pi Cluster. There’s no practical reason for it (for production use I’d go for more expandable hardware), but Pi clusters always seemed super cool to me & I really wanted one. It’s handled almost everything I’ve thrown at it (within reason) & is currently running great as an Edge Cluster.

Stuff to Buy

- Some 8GB Raspberry Pi 5s, find a vendor on rpilocator

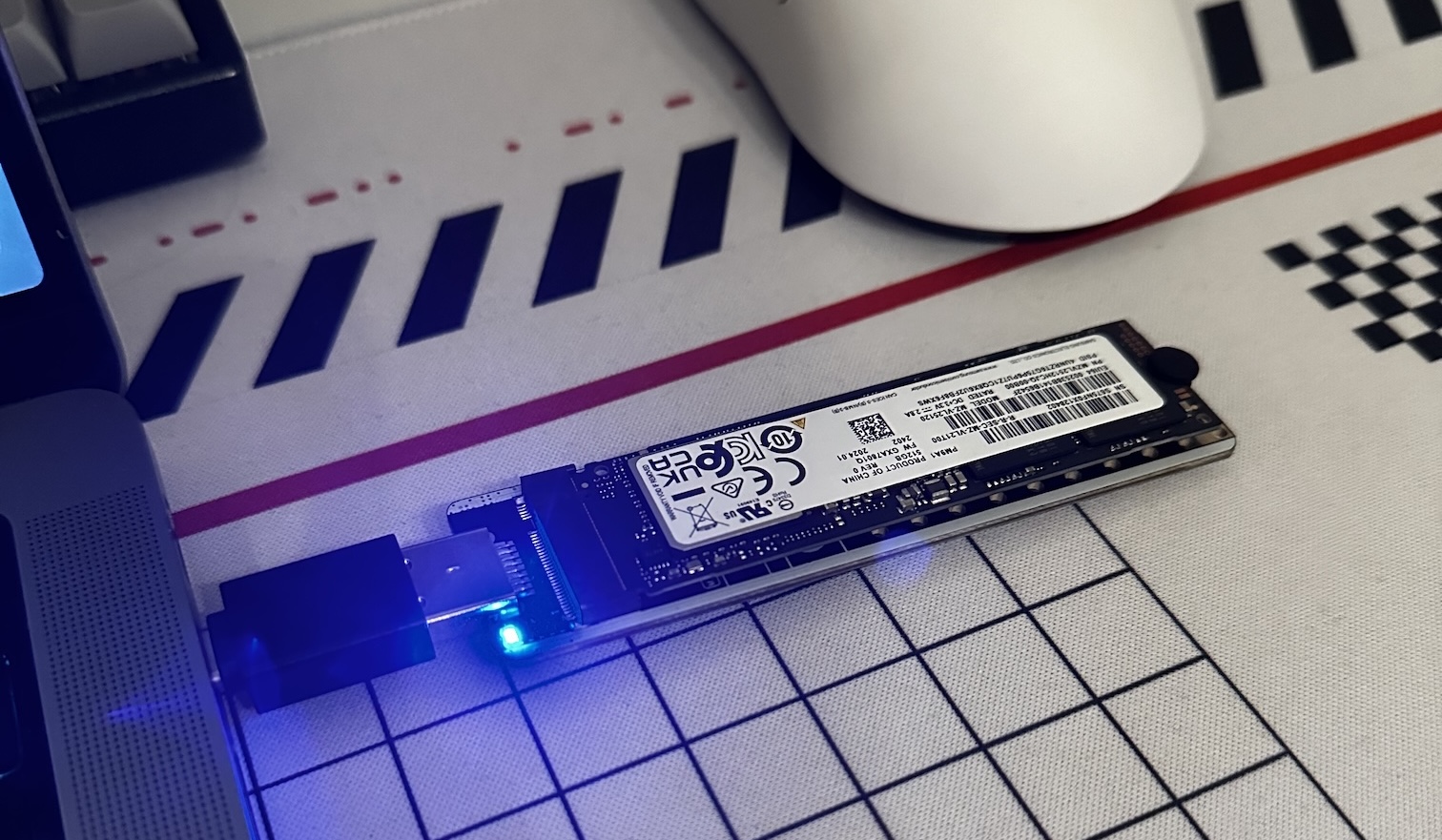

- NVMe adapter boards, I got the one from Pimoroni

- Some drives: I got some OEM Samsung PM9A1s after asking around a bit on r/homelab. You can get them cheap on eBay

- I’m still waiting for the official PoE HATs to come out, so for now I just got some Active Coolers

Assembly

I stacked the Pis up with some M2.5 standoffs. Getting the flex cable into the second clip can be a bit tricky. I found it easiest to connect the cable while the Pi & the NVMe board lie next to each other, then fold the Pi over onto the board, kinda like in the image.

Clustering

OS & First Boot

Went with Debian Lite on the Pis. I might switch to Alpine in the future to save some resources, but it’s been running stable, so I haven’t had a reason to yet. I did a fun USB-C → USB-A → NVMe adapter chain to flash the drives & it’s the fastest I’ve seen Etcher go. The first boot takes quite a while, depending on the drive size, because the root partition gets expanded to fill the drive, so give it some time to boot up.

Jeff Geerling has a great guide on how to set up NVMe boot. Make sure to enable PCIe Gen 3. While the Pi 5 isn’t officially certified for Gen 3 speeds, I’ve been running Longhorn storage replication under pretty heavy loads for the past seven months & it’s held up great.
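To put a rough number on what Gen 3 buys you: the Pi 5 exposes a single PCIe lane, which runs at Gen 2 (5 GT/s, 8b/10b encoding) by default, while Gen 3 runs at 8 GT/s with 128b/130b encoding. On the Pi, Gen 3 is switched on with dtparam=pciex1_gen=3 in config.txt. Here’s a small Python sketch of the theoretical per-lane throughput; it ignores packet & protocol overhead, so real NVMe numbers will land lower.

```python
# Theoretical PCIe throughput per link, ignoring packet/protocol overhead.
# Line rate (transfers/s) and encoding efficiency per generation.
PCIE_GENERATIONS = {
    2: (5.0e9, 8 / 10),     # Gen 2: 5 GT/s, 8b/10b encoding
    3: (8.0e9, 128 / 130),  # Gen 3: 8 GT/s, 128b/130b encoding
}


def lane_throughput_mb_s(gen: int, lanes: int = 1) -> float:
    """Return usable throughput in MB/s (10^6 bytes/s) for a PCIe link."""
    transfers_per_s, efficiency = PCIE_GENERATIONS[gen]
    bits_per_s = transfers_per_s * efficiency * lanes
    return bits_per_s / 8 / 1e6  # bits -> bytes -> MB


if __name__ == "__main__":
    # The Pi 5's external PCIe connector is a single lane (x1).
    for gen in (2, 3):
        print(f"Gen {gen} x1: {lane_throughput_mb_s(gen):.0f} MB/s")
    # Gen 2 x1: 500 MB/s
    # Gen 3 x1: 985 MB/s
```

So Gen 3 roughly doubles the ceiling for the drive, which is what makes it worthwhile for I/O-heavy workloads like Longhorn replication.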

Otherwise, make sure you enable SSH & add an SSH key. I also appended the following to the existing line in my cmdline.txt (the file has to stay a single line) to provision networking & enable cgroups for Kubernetes. Make sure to adjust it to match your network.

```
ip=10.0.1.51::10.0.1.254:255.255.255.0:kf-rpi-01:eth0:off cgroup_enable=cpuset cgroup_memory=1 cgroup_enable=memory
```

In the ip= parameter, 10.0.1.51 is the address, 10.0.1.254 the gateway, 255.255.255.0 the subnet mask & kf-rpi-01 the hostname.

Preparation

Depending on the number of Pis, you’ll now have to decide how many controllers & workers to run. etcd needs a quorum of floor(n/2) + 1 of its n members to stay consistent, so an odd number of controllers is recommended: an even count adds no extra fault tolerance over the odd count below it. Since I planned on expanding my Cluster down the line, I started out with three controller nodes. I installed keepalived & HAProxy on them to expose a single virtual IP for the Kubernetes API endpoint. For now this just load balances the requests coming in from my laptop (& only those using the admin kubeconfig, which makes this an insanely small set), but future worker nodes will benefit from this already being set up. Just waiting on that PoE module 👁️
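The quorum rule is easy to sanity-check with a few lines of Python. This minimal sketch (function names are just for illustration) computes the majority needed & how many controllers can fail before etcd stops accepting writes.

```python
# etcd quorum math: a cluster of n members needs a strict majority to make progress.


def quorum(members: int) -> int:
    """Smallest majority of `members` etcd nodes: floor(n/2) + 1."""
    if members < 1:
        raise ValueError("an etcd cluster needs at least one member")
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """How many members can fail while a quorum is still reachable."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"{n} controllers: quorum {quorum(n)}, "
              f"tolerates {fault_tolerance(n)} failure(s)")
```

Three & four controllers both survive exactly one failure, so the fourth would only add overhead; the next size that actually buys extra resilience is five.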

Setup

k3s ships as a single binary, which makes setting up a Cluster quite easy. There’s a great Ansible collection, k3s-ansible, which does most of the heavy lifting like prepping the hosts & setting up HA. All it requires is an inventory containing the Pis’ addresses & a few config args, for example turning off the local path provisioner by adding --disable=local-storage to your extra arguments. A reference for all flags can be found in the k3s docs, which I suggest browsing a bit: they are well written & have helped me with tons of issues.

```yaml
k3s_cluster:
  children:
    server:
      hosts:
        "kf-rpi-guppy-01":
          ansible_ssh_host: "10.0.1.51"
        "kf-rpi-guppy-02":
          ansible_ssh_host: "10.0.1.52"
        "kf-rpi-guppy-03":
          ansible_ssh_host: "10.0.1.53"
```

Wrapping up

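Once complete, retrieve a kubeconfig from one of the nodes (k3s writes it to /etc/rancher/k3s/k3s.yaml on the servers) or through the playbook, & run a quick kubectl get nodes to confirm every node is Ready. You can keep an eye on resource usage with kubectl top nodes, which works out of the box since k3s bundles metrics-server.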
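If you’d rather script that check (say, after re-running the playbook), here’s a minimal Python sketch that shells out to kubectl get nodes -o json & reports any node whose Ready condition isn’t True. It assumes kubectl is on your PATH & already using the kubeconfig from above; the function names are made up for this example.

```python
import json
import subprocess


def unready_nodes(node_list: dict) -> list:
    """Return names of nodes whose Ready condition isn't "True".

    `node_list` is the parsed output of `kubectl get nodes -o json`,
    a v1 List whose items are Node objects.
    """
    unready = []
    for node in node_list.get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        ready = next((c for c in conditions if c.get("type") == "Ready"), None)
        # A missing Ready condition counts as not ready.
        if ready is None or ready.get("status") != "True":
            unready.append(node["metadata"]["name"])
    return unready


def check_cluster() -> None:
    # Assumption: kubectl is installed and KUBECONFIG points at the cluster.
    out = subprocess.run(
        ["kubectl", "get", "nodes", "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    nodes = json.loads(out)
    pending = unready_nodes(nodes)
    total = len(nodes.get("items", []))
    if pending:
        print(f"{len(pending)}/{total} nodes not Ready: {', '.join(pending)}")
    else:
        print(f"all {total} nodes Ready")


if __name__ == "__main__":
    check_cluster()
```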

Wrapping up, I love the Helm Controller. It ships with k3s by default & lets you install Helm charts through HelmChart custom resources, meaning a lot of cluster setup can be done with just a few files. k3s-ansible also supports provisioning manifests during cluster setup via the extra_manifests variable. So if you want to replace default k3s components like Traefik with ingress-nginx & add cert-manager, this is a super convenient way to do it.
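As a sketch of what those files contain, the Python below builds helm.cattle.io/v1 HelmChart resources & prints them as a JSON List, which kubectl apply -f - accepts. The helper name is hypothetical, the charts are left unpinned, & the crds.enabled value assumes a recent cert-manager chart (older ones used installCRDs). Traefik itself would be turned off with --disable=traefik in your extra server arguments.

```python
from __future__ import annotations

import json


def helm_chart(name: str, repo: str, chart: str, target_namespace: str,
               values: dict | None = None, version: str | None = None) -> dict:
    """Build a HelmChart resource for the k3s Helm Controller (hypothetical helper)."""
    spec = {
        "repo": repo,
        "chart": chart,
        "targetNamespace": target_namespace,
        "createNamespace": True,
    }
    if version is not None:
        spec["version"] = version  # pin a chart version; omitted means latest
    if values:
        # valuesContent is a YAML string; JSON is valid YAML, so json.dumps works.
        spec["valuesContent"] = json.dumps(values)
    return {
        "apiVersion": "helm.cattle.io/v1",
        "kind": "HelmChart",
        # The k3s docs' examples keep HelmChart objects in kube-system.
        "metadata": {"name": name, "namespace": "kube-system"},
        "spec": spec,
    }


if __name__ == "__main__":
    charts = [
        helm_chart("ingress-nginx", "https://kubernetes.github.io/ingress-nginx",
                   "ingress-nginx", "ingress-nginx"),
        # Assumption: recent cert-manager charts install CRDs via crds.enabled.
        helm_chart("cert-manager", "https://charts.jetstack.io",
                   "cert-manager", "cert-manager",
                   values={"crds": {"enabled": True}}),
    ]
    # Wrap in a v1 List so the output can be piped into `kubectl apply -f -`.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": charts}, indent=2))
```

Usage: python charts.py | kubectl apply -f - (the script name is just an example).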

☸️ Happy Clustering!